RESEARCH ARTICLE

Classification of Upper Limb Motions from Around-Shoulder Muscle Activities: Hand Biofeedback

Jose González*, Yuse Horiuchi, Wenwei Yu

Article Information

Identifiers and Pagination:

Year: 2010

Volume: 4

First Page: 74

Last Page: 80

Publisher Id: TOMINFOJ-4-74

DOI: 10.2174/1874431101004020074

Article History:

Received Date: 8/10/2009

Revision Received Date: 25/11/2009

Acceptance Date: 25/11/2009

Electronic publication date: 28/5/2010

Collection year: 2010

open-access license: This is an open access article licensed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc3.0/) which permits unrestricted, non-commercial use, distribution and reproduction in any medium, provided the work is properly cited.

Abstract

Mining information from EMG signals to detect complex motion intention has attracted growing research attention, especially for upper-limb prosthetic hand applications. In most studies, recordings of forearm muscle activity were used as the signal source, from which the intention of wrist and hand motions was detected using pattern recognition technology. However, most daily-life upper limb activities need coordination of the shoulder-arm-hand complex; relying only on local information to recognize body-coordinated motion therefore has many disadvantages, because natural continuous arm-hand motions cannot be realized. Also, achieving a dynamical coupling between the user and the prosthesis will not be possible. The objective of this study was to investigate whether it is possible to associate the electromyogram (EMG) activities of the around-shoulder muscles with different hand grips and arm direction movements. Experiments were conducted to record the EMG of different arm and hand motions, and the data were analyzed to determine the contribution of each sensor, in order to distinguish the arm-hand motions as a function of the reaching time. Results showed that it is possible to differentiate hand grips and arm positions while doing a reaching and grasping task. These results are an important step toward achieving a closed-loop dynamical coupling between the user and the prosthesis.

1. INTRODUCTION

Mining information from EMG signals to detect complex motion intention has attracted growing research attention, especially for upper-limb prosthetic hand applications [1]. Previous studies have reported that up to 10 wrist and hand motions could be recognized from 2-3 channels of forearm electromyogram (EMG) [2,3]. Other studies have used non-stationary EMG at the beginning of motion [4] or mechanomyogram (MMG) as the signal source for motion intention detection [5]. In these studies, the motion intention of the wrist and hand was detected using pattern recognition techniques from recordings of forearm muscle activity. However, it is difficult to take body-coordinated motions into consideration from these signals alone; the movement of the artificial limb can therefore be unnatural if considered as a part of the whole body, and a dynamical coupling between the person and the prosthesis is not possible. Also, using forearm muscle activity to drive the artificial limb excludes higher-level amputees from using the system.

It has been shown that most daily-life upper limb activities involve coordination of the shoulder-arm-hand complex. For example, during reaching and grasping tasks [6-9], or when throwing and catching a ball [10], the trajectories of the shoulder, elbow and hand are tightly coupled. It has also been discussed that this coupling is task and situation dependent, for example when reaching for and grasping an object in different places and orientations [11].

Because of this dependency, research efforts have been made to differentiate hand motions using the EMG activity of proximal muscles [12-15]. However, it is still complex to classify and interpret the information acquired. C. Martelloni et al., in [12], used a Support Vector Machine based pattern recognition algorithm in an attempt to predict different grips, trying to reproduce the findings in monkeys described in [14]. They were able to discriminate 3 different grips (palmar, lateral or pinch grip), but they used data obtained from the forearm muscle activities of the flexor carpi radialis and extensor carpi radialis, which have been used in previous studies to control prosthetic hands [2-5,16]. This makes it difficult to determine the contribution of the proximal muscles to the motion detection of the hand. Also, in [13], Xiao Hu et al. compared the performance of a scalar autoregressive (AR) model with multivariate AR modeling for multichannel EMG sensors in order to classify upper arm movements. Using data obtained from the biceps, triceps, deltoid and brachioradialis, they were able to classify different arm movements accurately. Although the results are encouraging, they focus on the contribution of the muscles as a whole, so insight into the contribution of individual muscles is lost; besides, they did not attempt to predict hand positions. Additionally, Y. Koike et al., in [15], developed a forward dynamics model of the human arm from EMG readings of proximal muscles using neural networks, but did not attempt to predict hand positions.

In this study we investigated the contribution of the around-shoulder muscle activities to hand and arm direction movements, in order to explore the possibility of detecting the person’s intentions in a dynamical way (during the reaching process). Experiments were conducted to record the EMG signals from the around-shoulder muscles during different arm and hand motions, and to analyze the contribution of these sites to distinguishing different hand and arm motions.

2. METHODS

2.1. Subjects

Four male subjects aged 23.75±2.06 years participated in the experiments. They were informed about the experimental procedures and gave written consent. All subjects were healthy, with no known history of neurological abnormalities or musculoskeletal disorders.

2.2. Experimental Setup

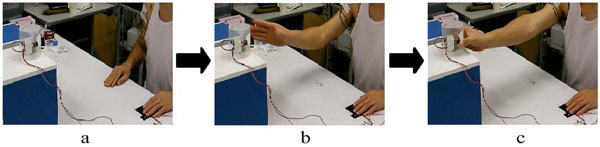

Subjects were asked to sit comfortably in front of a desk and to move their dominant arm from an assigned position towards an object, which had to be grasped (Fig. 1). To start the reaching motion of a trial, the subjects had to push a switch button (switch 1) with their non-dominant hand, while their dominant hand rested in a natural open flat position on the desk (the start position of each trial). Then, with their dominant hand, they had to reach and grasp the object. Before grasping the object completely, the subjects were required to press another switch button (switch 2) in order to finish the trial.

Three different object-related grips were used during the experiments, as shown in Fig. (2). The objects were placed in five different positions relative to the subject, as denoted in Fig. (3). The target objects were placed so as to allow maximal elbow extension in all five directions (see Fig. 3). Moreover, the height of the chair was adjusted for each subject in order to obtain an elbow angle of 90° (keeping the trunk erect). A self-paced speed was allowed for the reaching and grasping tasks.

Fig. (2). Grasping tasks. a. Horizontal cylinder grip (g1) b. Vertical cylinder grip (g2) c. Plane surface grip (g3).

Fig. (3). Reaching positions. 5 different reaching positions were used in each trial.

The subjects were asked to reach each object nine times for each position, so a total of 135 trials were performed by each subject (3 objects × 5 positions × 9 repetitions). The subjects were able to rest for a few seconds between trials. They were also requested not to bend or rotate the trunk, in order to prevent translational motions of the shoulder. Finally, they were asked to execute each grip in all the different positions.

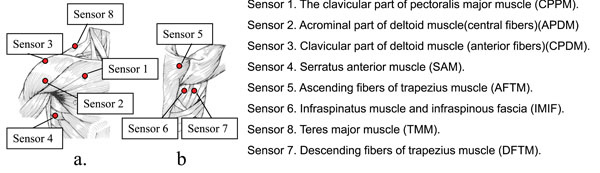

2.3. Devices

Raw EMG signals were recorded at a sampling rate of 2.0 kHz and stored (using National Instruments LabVIEW) for off-line data analysis using Matlab. After cleaning the skin surface, eight EMG sensors were placed to record the proximal muscles, as indicated in Fig. (4). Disposable solid-gel Ag-AgCl surface electrodes (Biorode SDC-H, GE Yokogawa Medical Systems, Japan) were used. The location of each electrode was chosen according to [13] and preliminary experiments of our research group.

Fig. (4). Placement of EMG sensors for this study.

2.4. Feature Extraction

EMG signals were processed by a 50 Hz high-pass filter to suppress motion-related artifacts, then rectified, and filtered by a 2 Hz low-pass filter. The EMG signals of the reaching phase were taken as the interval between the signals of switch 1 and switch 2. The reaching phase was then divided equally into 10 segments.
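The preprocessing chain described above can be sketched as follows. This is only an illustration in plain Python, not the Matlab code used in the study: the filter type and order are not stated in the text, so first-order RC filters are assumed here, and all function names are hypothetical.

```python
import math

FS = 2000.0  # sampling rate in Hz, as stated in Section 2.3


def high_pass(x, cutoff_hz, fs=FS):
    """First-order RC high-pass filter (assumed design, not from the paper)."""
    if not x:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs
    alpha = rc / (rc + dt)
    y = [x[0]]
    for i in range(1, len(x)):
        y.append(alpha * (y[-1] + x[i] - x[i - 1]))
    return y


def low_pass(x, cutoff_hz, fs=FS):
    """First-order RC low-pass filter (assumed design, not from the paper)."""
    if not x:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / fs
    alpha = dt / (rc + dt)
    y = [x[0]]
    for i in range(1, len(x)):
        y.append(y[-1] + alpha * (x[i] - y[-1]))
    return y


def preprocess(raw):
    """50 Hz high-pass, full-wave rectification, then 2 Hz low-pass."""
    rectified = [abs(v) for v in high_pass(raw, 50.0)]
    return low_pass(rectified, 2.0)


def split_segments(signal, n_segments=10):
    """Split the reaching phase (switch 1 to switch 2) into equal segments.

    Trailing samples that do not fill a whole segment are dropped.
    """
    size = len(signal) // n_segments
    return [signal[k * size:(k + 1) * size] for k in range(n_segments)]
```

In use, one channel of one trial would be cut between the two switch events, passed through `preprocess`, and then through `split_segments` to obtain the 10 segments analyzed below.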

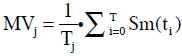

For analysis two features were employed. The first feature was the mean value (MV), which is expressed by equation (1).

$$MV_j = \frac{1}{T} \sum_{t_i = T_j}^{T_j + T - 1} S_m(t_i) \qquad (1)$$

where j corresponds to a segment; Sm(ti) is the preprocessed EMG at ti; T is the size of each segment; and Tj is the starting point of the segment. MVj is the mean value for segment j. The second feature employed for analysis was the peak value of muscle activation (PVM) of each segment. Both MV and PVM were normalized for further analysis.
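As a small illustration of the two features, the sketch below computes MV and PVM for a list of segments. The normalization scheme is not described in the text; dividing by the maximum over segments is an assumption made here for illustration only, and the function names are hypothetical.

```python
def mean_value(segment):
    """MV_j: mean of the preprocessed EMG samples in one segment."""
    return sum(segment) / len(segment)


def peak_value(segment):
    """PVM_j: peak of the preprocessed EMG samples in one segment."""
    return max(segment)


def normalize(values):
    """Scale features by their maximum (assumed scheme, not from the paper)."""
    peak = max(values)
    return [v / peak if peak > 0 else 0.0 for v in values]


def segment_features(segments):
    """Return normalized (MV, PVM) lists, one value per segment."""
    mv = normalize([mean_value(s) for s in segments])
    pvm = normalize([peak_value(s) for s in segments])
    return mv, pvm
```

With 8 channels, calling `segment_features` once per channel gives an 8-dimensional feature vector for each segment and each feature type.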

2.5. Method of Analysis

For the data analysis, a classifier based on K-means was applied to the features extracted from all 8 channels. This method calculates the distance between an input vector and the centroid of each existing cluster in a segment, then assigns the input vector to the cluster at minimum distance. The cluster is then updated to contain the new input. Distance was calculated with the following expression.

[Equation (2): distance between the input vector and the cluster centroid]
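The assignment and update steps described above can be sketched as a nearest-centroid classifier. Euclidean distance is assumed here because it is the usual choice for K-means; the class and method names are hypothetical, and this is not the authors' implementation.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two feature vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class CentroidClassifier:
    """Nearest-centroid classifier with running-mean centroid updates."""

    def __init__(self):
        self.centroids = {}  # label -> centroid vector
        self.counts = {}     # label -> number of vectors in the cluster

    def add(self, label, vector):
        """Add a vector to a cluster and update its centroid."""
        n = self.counts.get(label, 0)
        if n == 0:
            self.centroids[label] = list(vector)
        else:
            c = self.centroids[label]
            self.centroids[label] = [
                (ci * n + vi) / (n + 1) for ci, vi in zip(c, vector)
            ]
        self.counts[label] = n + 1

    def classify(self, vector, update=True):
        """Assign the vector to the closest cluster, optionally updating it."""
        label = min(
            self.centroids,
            key=lambda k: euclidean(vector, self.centroids[k]),
        )
        if update:
            self.add(label, vector)
        return label
```

One classifier instance per segment would be seeded with labeled vectors through `add`, after which `classify` returns the label of the nearest centroid for each new input.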

Two kinds of discrimination were tested:

- Discrimination of the final position (p1, p2, p3, p4, p5) for each object-related grip (g1, g2, g3). We will refer to this as: disc_i.

- Discrimination of the object-related grip (g1, g2, g3) for each final position (p1, p2, p3, p4, p5). This will be referred to as: disc_ii.

A segment was considered an effective point if at least 70% of the grips or positions were correctly classified; otherwise it was regarded as an invalid point. Fig. (5a) shows an example of an effective point, and Fig. (5b) shows an example of an invalid point for the disc_i data. Furthermore, for both discrimination methods there was a total of 20 points.

Fig. (5). Examples of an effective and an invalid point of disc_i. a. Effective point, b. Invalid point.
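The effective-point criterion can be illustrated with a short sketch. The reading used here, that every class in a segment must reach the 70% threshold, is an assumption based on the rates reported in the result tables; the function names are hypothetical.

```python
def correct_rates(true_labels, predicted_labels):
    """Per-class fraction of trials that were classified correctly."""
    totals, hits = {}, {}
    for t, p in zip(true_labels, predicted_labels):
        totals[t] = totals.get(t, 0) + 1
        hits[t] = hits.get(t, 0) + (1 if t == p else 0)
    return {c: hits[c] / totals[c] for c in totals}


def is_effective_point(rates, threshold=0.70):
    """Assumed reading: a segment is effective if every class meets the threshold."""
    return all(r >= threshold for r in rates.values())
```

With nine repetitions per class, 7 of 9 correct trials (about 78%) passes the threshold, while 6 of 9 (about 67%) does not.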

After the data were classified, a Tukey-Kramer test was performed, as an effective feature selection method, in order to determine each sensor's contribution to the discrimination of position or grip pairs. This is explained further in the next section.

3. RESULTS

3.1. Using 8 Features

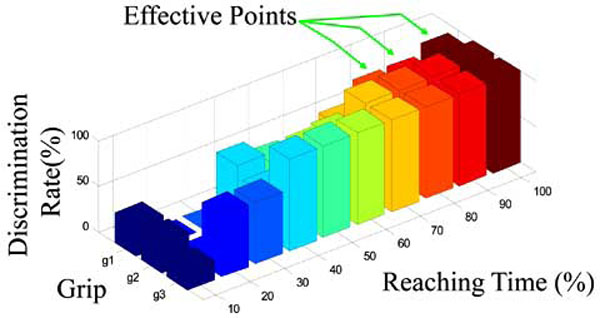

Fig. (6) shows the correct discrimination rate of the positions for the object-related grip g2 (disc_i) of subject C, as a function of the reaching time, using the PVM feature from the 8 EMG channels. The x axis corresponds to the percentage of the reaching time, where 0% corresponds to the moment the first switch was pressed, and 100% to the moment the switch in the object was pressed. Since the reaching time was divided into 10 segments, each point corresponds to one segment. The y axis represents the final reaching position being discriminated, and the z axis corresponds to the correct discrimination rate.

Fig. (6). Correct rate discrimination of the positions for the object related grip g2 (disc_i) of subject C, in terms of the reaching time, and using the PVM feature from the 8 EMG channels.

It can be observed from the figure that the correct discrimination rates for positions p3 and p4 are higher than for the other positions, which was also observed in the other subjects’ data. The figure also shows that at 90% of the reaching time, all the final positions for g2 were recognized with high accuracy. Table 1 shows the correct discrimination rate for the case presented in Fig. (5). Also, Table 2 shows the number of effective points during the trials for all the subjects.

Table 1. Correct Discriminated Rate for the Case Presented on Fig. (5)

| Reaching Time (%) | Rate p1 (%) | Rate p2 (%) | Rate p3 (%) | Rate p4 (%) | Rate p5 (%) |
|---|---|---|---|---|---|
| 90 | 100 | 78 | 100 | 100 | 78 |

Table 2. Number of the Effective Points for disc_i

| Subject | Feature Extraction | Grip g1 | Grip g2 | Grip g3 |
|---|---|---|---|---|
| A | MV | 1 | 2 | 3 |
| A | PVM | 0 | 1 | 4 |
| B | MV | 0 | 0 | 0 |
| B | PVM | 0 | 0 | 0 |
| C | MV | 1 | 1 | 0 |
| C | PVM | 1 | 0 | 0 |
| D | MV | 0 | 0 | 0 |
| D | PVM | 0 | 0 | 0 |

Fig. (7) shows the correct discrimination rate of the object-related grip for position p3 of subject C, as a function of the reaching time, using the MV feature from the 8 EMG channels. As in the previous figure, the x axis corresponds to the reaching time segments, the y axis represents the different object-related grips (g1, g2, and g3), and the z axis corresponds to the correct discrimination rate.

Fig. (7). Correct discrimination rate of the object related grip for position p3 of subject C, in terms of the reaching time and using the MV feature for the 8 EMG channels.

It can be seen from the figure that from 80% of the reaching time onward, effective points were found for the 3 types of grip. The discrimination rates for 80, 90 and 100% of the reaching time can be observed in Table 3. Therefore, for subject C, at 80, 90, and 100% of the reaching time the grips at position p3 were recognized with high accuracy.

Table 3. Correct Discriminated Rate for the Case Presented on Fig. (5)

| Reaching Time (%) | Rate g1 (%) | Rate g2 (%) | Rate g3 (%) |
|---|---|---|---|
| 100 | 89 | 100 | 100 |
| 90 | 78 | 100 | 100 |
| 80 | 78 | 100 | 100 |

Also, it was found that the discrimination rate of g3 was higher than that of the other grips for all the subjects. Table 4 shows the effective points during the trials for all the subjects.

Table 4. The Number of the Effective Points for disc_ii

| Subject | Feature Extraction | Position p1 | Position p2 | Position p3 | Position p4 | Position p5 |
|---|---|---|---|---|---|---|
| A | MV | 0 | 0 | 3 | 0 | 6 |
| A | PVM | 0 | 3 | 1 | 0 | 3 |
| B | MV | 0 | 0 | 0 | 2 | 0 |
| B | PVM | 0 | 1 | 0 | 2 | 0 |
| C | MV | 3 | 0 | 3 | 0 | 0 |
| C | PVM | 5 | 2 | 5 | 0 | 0 |
| D | MV | 4 | 0 | 0 | 0 | 0 |
| D | PVM | 6 | 0 | 0 | 0 | 0 |

3.2. Selecting Effective Features

In order to select the most effective features from the data to reduce the data processing load, a Tukey-Kramer test was performed. This method allowed us to know the contribution of each sensor in order to discriminate position pairs. Table 5 shows the results.

Table 5. The Multiple Comparison for Sensors Contribution to Discrimination

| Directions | Sensor Number | Total_h | |||||||

|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | ||

| p1/p2 | ○ | ○ | ○ | ○ | ○ | 5 | |||

| p1/p3 | ○ | ○ | ○ | ○ | ○ | 5 | |||

| p1/p4 | ○ | ○ | ○ | ○ | ○ | 5 | |||

| p1/p5 | ○ | ○ | ○ | ○ | 4 | ||||

| p2/p3 | ○ | ○ | ○ | ○ | ○ | ○ | ○ | 7 | |

| p2/p4 | ○ | ○ | ○ | ○ | 4 | ||||

| p2/p5 | ○ | 1 | |||||||

| p3/p4 | ○ | ○ | ○ | ○ | ○ | ○ | 6 | ||

| p3/p5 | ○ | ○ | ○ | ○ | ○ | ○ | 6 | ||

| p4/p5 | ○ | ○ | ○ | 3 | |||||

| total_v | 8 | 8 | 4 | 4 | 2 | 6 | 9 | 5 | |

pi/pj means the discrimination between pi and pj.

○: significant difference. X: no significant difference.

The effective features were selected by first choosing the direction pairs with the smallest number of significant differences (total_h), which are the most difficult pairs to distinguish. Then the sensors that were able to distinguish the selected pairs (presented in Table 5 as “○”) and that provided discrimination for most of the other pairs were chosen (the total number of significant discriminations of a sensor is shown in the row “total_v” in Table 5). Different groupings were tested, but the best result was obtained when choosing 4 sensors. Certainly, this method is not optimal for the discrimination in terms of the candidate number and feature combination; however, the general information sufficient for discrimination of all directions (final positions) could be expected to be retained.
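One possible reading of this selection heuristic is sketched below. It does not perform the Tukey-Kramer test itself; it takes the test's outcome as input, in the form of a mapping from each direction pair to the set of sensors showing a significant difference. The function name, the tie-breaking rule and the toy data in the usage note are hypothetical and do not reproduce Table 5.

```python
def select_sensors(significant, n_select=4):
    """Pick sensors that separate the hardest pairs and most other pairs.

    significant: dict mapping a pair label (e.g. "p2/p5") to the set of
    sensor IDs with a significant difference for that pair.
    """
    # total_h: number of sensors that separate each pair
    total_h = {pair: len(s) for pair, s in significant.items()}
    # total_v: number of pairs that each sensor separates
    total_v = {}
    for sensors in significant.values():
        for s in sensors:
            total_v[s] = total_v.get(s, 0) + 1

    # The hardest pairs are those with the fewest separating sensors.
    hardest = min(total_h.values())
    must_cover = set()
    for pair, n in total_h.items():
        if n == hardest:
            must_cover |= significant[pair]

    # Sensors separating a hard pair come first, then higher total_v,
    # then lower sensor ID (assumed tie-breaking rule).
    ranked = sorted(
        total_v,
        key=lambda s: (s not in must_cover, -total_v[s], s),
    )
    return sorted(ranked[:n_select])
```

For example, with the toy input `{"p1/p2": {1, 2, 3}, "p1/p3": {1, 2, 4}, "p2/p3": {5}}` and `n_select=2`, sensor 5 is kept because it is the only one separating the hardest pair, and sensor 1 is added as the sensor with the highest total_v and lowest ID.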

Comparisons were made between the discrimination using the 4 selected features and the 8 features for disc_i. The effective points whose correct rates improved are shown in Table 6, the unchanged rates in Table 7, and the decreased rates in Table 8. As can be observed, by using the selected features, the discrimination rates of 13 effective points were improved, 10 were unchanged, and only 3 were slightly decreased. This improvement is especially notable for the discrimination rates of p5, which were lower when using 8 features.

Table 6. Improved Discrimination Rates when Using the Selected 4 Sensors (disc_i)

| Reaching Time (%) | Grasp | Rate p1 (%) | Rate p2 (%) | Rate p3 (%) | Rate p4 (%) | Rate p5 (%) | Used Sensor |
|---|---|---|---|---|---|---|---|
| 100 | g1 | 89 | 78(56) | 100 | 100 | 78 | 1,2,7,8 |
| 100 | g3 | 100 | 100 | 100 | 100 | 78(56) | 1,5,6,7 |
| 90 | g2 | 100 | 89 | 100 | 100 | 78(56) | 1,2,7,8 |
| 80 | g2 | 100 | 100 | 100 | 100 | 78(56) | 1,2,7,8 |
| 70 | g2 | 100 | 89 | 100 | 100 | 78(56) | 1,2,7,8 |
| 100 | g2 | 89 | 78 | 100 | 100 | 78(44) | 2,6,7,8 |
| 80 | g2 | 100 | 78 | 100 | 100 | 78(56) | 1,2,6,8 |
| 50 | g3 | 100(56) | 100 | 89 | 100 | 89(67) | 1,2,6,7 |
| 100 | g1 | 100 | 78 | 100 | 100 | 89(56) | 1,4,5,6 |
| 90 | g1 | 100 | 78 | 100 | 100 | 89(56) | 1,4,5,6 |
| 100 | g3 | 78(67) | 78(56) | 78 | 100 | 78 | 1,2,5,8 |
| 90 | g1 | 89(67) | 78 | 89 | 100 | 78 | 1,3,6,8 |
| 70 | g1 | 89(44) | 100 | 89 | 89 | 89 | 3,4,6,8 |

The figure in the bracket shows the correct rate using 8 features.

Table 7. Unchanged Discrimination Rates when Using the Selected 4 Sensors (disc_i)

| Reaching Time (%) | Grasp | Rate p1 (%) | Rate p2 (%) | Rate p3 (%) | Rate p4 (%) | Rate p5 (%) | Used Sensor |
|---|---|---|---|---|---|---|---|
| 90 | g3 | 100 | 100 | 100 | 100 | 78 | 1,5,6,7 |
| 80 | g1 | 100 | 78 | 89 | 100 | 78 | 1,2,3,7 |
| 80 | g3 | 100 | 100 | 100 | 100 | 89 | 1,2,6,7 |
| 70 | g3 | 100 | 100 | 100 | 100 | 78 | 1,2,6,8 |
| 60 | g2 | 100 | 89 | 100 | 100 | 78 | 1,2,7,8 |
| 50 | g2 | 100 | 89 | 78 | 100 | 89 | 2,6,7,8 |
| 90 | g2 | 100 | 100 | 100 | 100 | 100 | 1,2,7,8 |
| 60 | g3 | 89 | 78 | 89 | 89 | 78 | 1,4,6,8 |

The figure in the bracket shows the correct rate using 8 features.

Through a similar comparison for disc_ii, the same tendency could be observed. The numbers of effective points whose discrimination rates were improved, unchanged, and decreased were 34, 44 and 6, respectively. This clearly shows the improvement of the discrimination rates obtained by selecting the features appropriately.

Finally, Table 9 shows how frequently each sensor appeared in the effective points.

Table 8. Decreased Discrimination Rates when Using the Selected 4 Sensors (disc_i)

| Reaching Time (%) | Grasp | Rate p1 (%) | Rate p2 (%) | Rate p3 (%) | Rate p4 (%) | Rate p5 (%) | Used Sensor |
|---|---|---|---|---|---|---|---|
| 70 | g3 | 100 | 100 | 100 | 67(89) | 78 | 1,2,6,7 |
| 40 | g3 | 89 | 89 | 100 | 100 | 67(89) | 1,2,6,8 |
| 30 | g3 | 67(78) | 100 | 89 | 100 | 78 | 1,5,6,8 |

The number in the bracket shows the discrimination rate using 8 features.

Table 9. Effective Points Frequency of Appearance in Each Sensor

| Sensor ID | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Frequency | 73 | 52 | 14 | 38 | 31 | 42 | 52 | 48 |

4. DISCUSSION

The rapid development of lightweight dexterous prosthetic hands calls for more research on new data mining methods to detect dynamical motion intention. New techniques and algorithms will allow taking full advantage of the many degrees of freedom of these artificial limbs, and hopefully will allow the user to acquire more natural and intuitive control and manipulation of the prosthesis. Different data mining methods have been used to extract and predict information from EMG sensors, with neural networks being the most commonly used [1-3, 12-16]. In [17], R. Ashan et al. reviewed the different types of classifiers used to date for EMG extraction in Human Computer Interaction applications. They conclude that neural networks dominate these applications, but also point out the advantages of other methods. In [15], a three-layer neural network using the Kick Out method was applied, and the authors were able to develop a model that relates motor commands to movement trajectories of the arm. Also, in [13], a statistical approach was used to model arm motions: a multivariate analysis of the EMG information was used to take advantage of the correlation of the EMG activities when making arm movements. The results of these studies show that it is possible to extract arm dynamics information from the activities of the proximal muscles. These results therefore encourage going further and trying to extract information that will allow prediction of not only arm but also hand trajectories in reaching and grasping tasks.

T. Brochier et al., in [14], explored different muscle EMG activities in object-specific grasps in macaque monkeys, and C. Martelloni et al., in [12], reproduced the experiment for humans. They were able to classify 3 different grips using pattern recognition algorithms, but along with the proximal muscles they also used forearm muscle activities, making it difficult to determine the contribution of the proximal muscles to the recognition.

The present study aimed to explore the contribution of the EMG signals of the proximal muscles, more specifically the around-shoulder muscles, to the activities of the arm-hand dynamics. In this way we can use this information to predict the type of grip and arm position dynamically, while reaching. The results showed that it is possible to distinguish the final position and the final grip with a simple classifier at around 80% of the reaching time, suggesting that this rate could be improved using more complex algorithms. For example, even when reducing the number of sensors used to discriminate the positions and grips, the results were very promising. Certainly, the classification method used was not optimal, since it leaves aside several important components and is too slow for actual applications, but it indicates the possibility of using around-shoulder muscles to predict hand and arm movements in a dynamical way.

The sensor sites 1, 2, 3 and 5 (refer to Fig. 4) were also employed in [12, 13, 15]. However, the comparison makes clear that the infraspinatus muscle and infraspinatus fascia (6), the teres major muscle (7), and the descending fibers of the trapezius muscle (8) also play important roles in discriminating arm-hand motions.

These findings are important for achieving a dynamical coupling between the person and the machine. In our research group, we are currently trying to achieve this coupling by using closed-loop control of the artificial limb. Therefore, not only is accurate real-time intention detection of the hand and arm trajectory needed, but different redundant feedback channels are also needed so that the user can notice whether the prosthetic hand is doing what he or she intends [18]; the user can thus have a dynamical correlation of the intention-action coupling of the reaching process with the prosthesis. In this way we hope to achieve body-prosthesis coordination for more natural manipulation of the artificial limb when reaching and grasping in daily-life activities, and to reduce the amount of conscious awareness needed in the process.

Currently, we are in the process of classifying EMG signals from the around-shoulder muscles using a back propagation neural network, with impressive results.

5. CONCLUSIONS AND FUTURE DIRECTIONS

This study shows that it is possible to distinguish different arm direction motions and hand grips using EMG signals from around-shoulder muscle activities when reaching for and grasping objects. Moreover, not only pure shoulder muscles contribute to the discrimination; other proximal muscles contribute as well, because of the motion coordination of the whole body.

As future work, other classification algorithms (such as neural networks) will be tested in order to achieve real-time discrimination of the arm-hand motions. In addition, different types of sensors (such as accelerometers) will be used to improve the detection and feature extraction of the muscle activities.

ACKNOWLEDGEMENTS

This work was supported in part by the Ministry of Education, Science, Sports and Culture, Grant-in-Aid for Scientific Research (B), 2007, 19300199.